NuGet package restore is a great feature, as it means you no longer need to check packages in to source control. The only downside is that you need an internet connection to "restore" the packages.

This post is going to help address that issue by showing how you can keep your downloaded NuGet packages in a central location to save space and provide offline access.

Warning: this works for me but it isn't a supported use case, so YMMV + use at your own risk.

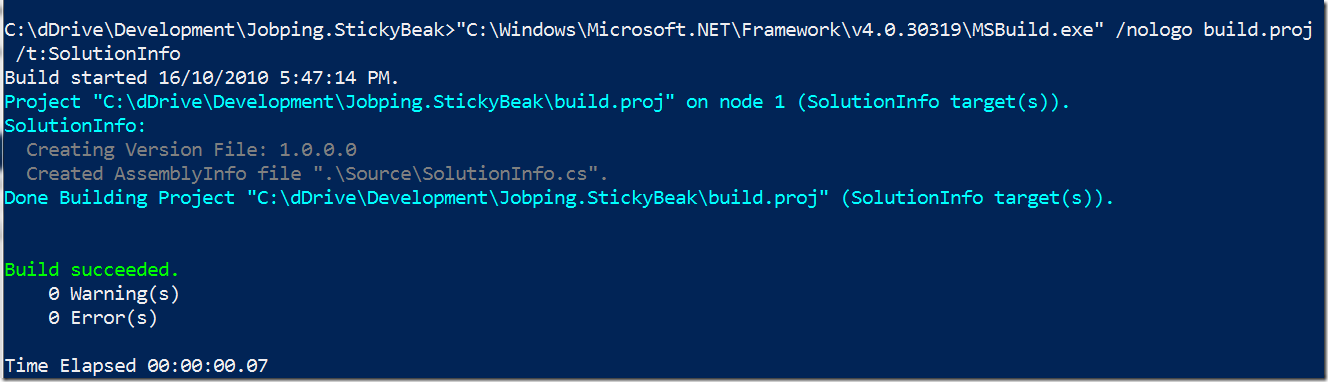

Firstly, enable NuGet package restore on your solution

To enable package restore (make sure you have the NuGet 2.0 extension installed), simply right-click your solution and select "Enable NuGet Package Restore".

Once this is done, any packages missing from your packages folder will be automatically downloaded when you build the solution. Try it out: delete any folder under the "packages" directory and rebuild the solution. NuGet will automatically download the missing packages.

Next, set up a central package repository

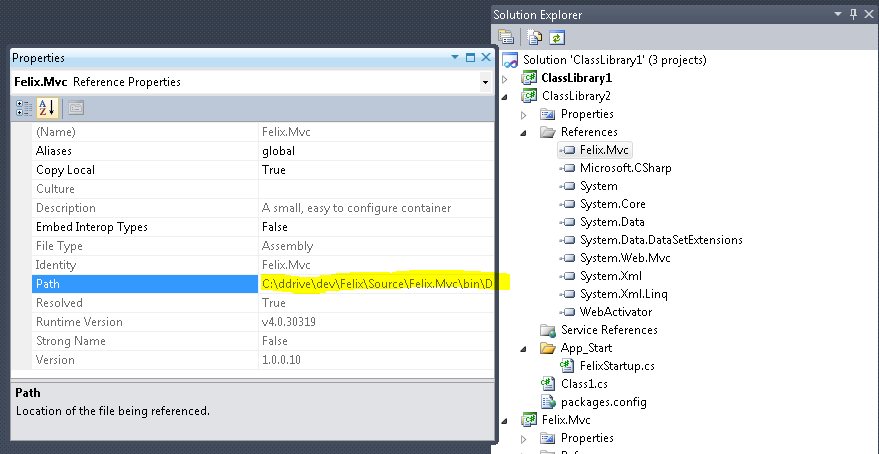

Now, to minimize the number of items we need to download (and the disk space they use), we are going to configure NuGet to use a single location for the packages. Normally NuGet stores the packages in a "packages" folder at the solution level, but this means a lot of files are duplicated across solutions. I have set up a global location for my packages at "C:\ddrive\dev\_nuget_repository\packages", but this can vary between computers as it's based on an environment variable.

To do this, first create an environment variable called PackagesDir.
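If you prefer to create the variable from a PowerShell prompt rather than through the System Properties dialog, something like this should do it. This is only a sketch: the path is just my example location, and setting the variable at the "User" level is an assumption on my part (a machine-wide variable would work too).

```powershell
# Assumption: this is the example location from this post; substitute your own.
$packagesDir = 'C:\ddrive\dev\_nuget_repository\packages'

# Persist the variable for the current user so that newly started
# processes (including Visual Studio) can see it.
[Environment]::SetEnvironmentVariable('PackagesDir', $packagesDir, 'User')

# Also set it for the current session so it can be checked straight away.
$env:PackagesDir = $packagesDir
"PackagesDir = $env:PackagesDir"
```

Programs that were already running will not pick up the new value until they are restarted.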

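Next, modify "NuGet.targets" (this file is added to your solution in the first step) and add the attribute Condition=" '$(PackagesDir)' == '' " to the two lines that set PackagesDir. With this condition, the default solution-level folder is only used when PackagesDir has not already been set by the environment variable.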
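If you would rather script that edit than make it by hand, here is a rough sketch. It assumes the two lines in question are the `<PackagesDir>` elements in NuGet.targets (that is my reading of the condition, so check your own copy of the file first), and `Add-PackagesDirCondition` is a made-up helper name, not anything that ships with NuGet.

```powershell
function Add-PackagesDirCondition {
    param([Parameter(Mandatory = $true)][string]$TargetsPath)

    $fullPath = (Resolve-Path $TargetsPath).Path

    $targets = New-Object System.Xml.XmlDocument
    $targets.PreserveWhitespace = $true   # keep the file's existing layout
    $targets.Load($fullPath)

    # Match on the local name so the MSBuild XML namespace
    # does not have to be registered.
    $nodes = $targets.SelectNodes("//*[local-name()='PackagesDir']")

    foreach ($node in $nodes) {
        # The backtick stops PowerShell from expanding $(...);
        # MSBuild has to see the text literally.
        $node.SetAttribute('Condition', " '`$(PackagesDir)' == '' ")
    }

    $targets.Save($fullPath)

    # Report how many elements were changed.
    return $nodes.Count
}

# Example call (hypothetical path, relative to the solution directory):
# Add-PackagesDirCondition -TargetsPath '.\.nuget\NuGet.targets'
```

The function overwrites any Condition attribute already present on those elements, so keep a copy of the original file (or rely on source control) before running it.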

NB: Commit all the files under the .nuget folder to source control.

You might need to restart Visual Studio, but now your packages should be stored in your preferred central location.

Finally, let's set up our own NuGet feed based on the packages in this central repository.

Notice how I added an extra "packages" directory to my location. This is because the packages directory will hold all the extracted packages created by NuGet and Visual Studio. We will use the parent directory as the NuGet feed directory.

This relies on a PowerShell script to copy the .nupkg files (zipped packages) that exist in the subdirectories up to the parent (in my case: C:\ddrive\dev\_nuget_repository\).

Save the following PowerShell script to a file called "_PackageCopier.ps1" in the _nuget_repository directory:

    ""
    "Package Copier Starting..........."
    ""

    # Use the default relative paths unless both a source and a destination are passed in.
    if ($args.Length -ne 2) {
        $source = Resolve-Path "..\..\packages"
        $destination = Resolve-Path "..\..\_nuget_repository\"
    }
    else {
        $source = $args[0]
        $destination = $args[1]
    }

    if (-not (Test-Path -PathType Container $source)) {
        throw ("Source directory does not exist, source: " + $source)
    }

    if (-not (Test-Path -PathType Container $destination)) {
        throw ("Destination directory does not exist!! " + $destination)
    }

    # Copy every .nupkg that is not already present in the destination directory.
    Get-ChildItem -Recurse -Filter "*.nupkg" $source `
        | Where-Object { -not (Test-Path -PathType Leaf (Join-Path $destination $_.Name)) } `
        | Copy-Item -Destination $destination -Verbose

Now save the following bat file into the same directory (called "_update_from_repository.bat"):

    powershell.exe .\_PackageCopier.ps1 "packages" "."
    pause

If you get errors running PowerShell, see Stack Overflow. Basically, I have set my execution policy to unrestricted for both the 32-bit and 64-bit PowerShell prompts.

Now when you double click the _update_from_repository.bat your local NuGet repository will be updated with all the NuGets you are using from all your solutions.
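To check what the copier would pick up (or to confirm nothing is left over after it has run), a small dry-run helper can list the .nupkg files that are under the packages directory but not yet in the feed directory. This is just a sketch that reuses the same filter as the copier script; `Get-MissingPackages` is a hypothetical name of my own.

```powershell
function Get-MissingPackages {
    param(
        [Parameter(Mandatory = $true)][string]$Source,
        [Parameter(Mandatory = $true)][string]$Destination
    )

    # Same selection as the copier script, but it only lists file names
    # instead of copying anything.
    Get-ChildItem -Recurse -Filter '*.nupkg' $Source |
        Where-Object { -not (Test-Path -PathType Leaf (Join-Path $Destination $_.Name)) } |
        Select-Object -ExpandProperty Name
}

# Example, run from the _nuget_repository directory:
# Get-MissingPackages -Source '.\packages' -Destination '.'
```

Right after the bat file has finished, the example call should list nothing.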

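Set up the local repository in Visual Studio by adding the _nuget_repository directory as a package source (Tools > Options > Package Manager > Package Sources).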

That's it. You should now have a central repository for all NuGet packages and a local feed of all the packages you have used.

It would be great if the NuGet.targets file could be modified permanently and globally to respect an environment variable!

Below is a screen grab of what my _nuget_repository directory looks like: